Introduction

The use of LLMs in the creation of chatbots is old news. However, LLMs have more uses than text generation when developing conversational agents. One of the main challenges of creating a conversational agent is text classification for intent detection. With models such as OpenAI’s GPT-3.5 or Google’s PaLM 2, this task becomes much easier.

Conversational agent framework

The difference between a chatbot (e.g. ChatGPT) and a conversational agent (e.g. Siri or Alexa) is that a chatbot uses a text generation model to hold a conversation with the user, while a conversational agent has a set of functions it can call and tries to understand what the user wants to accomplish.

For example, I might say “Siri, play Peaches by Justin Bieber”, and my phone will know that it needs to call a function that opens the Spotify app and plays the first result for the given query (“Peaches” by Justin Bieber).

| Chatbots | (Task-based) Dialog Agents |
|---|---|
| • mimic informal human chatting • for fun, or even for therapy | • interfaces to personal assistants • cars, robots, appliances • booking flights or restaurants • actionable |

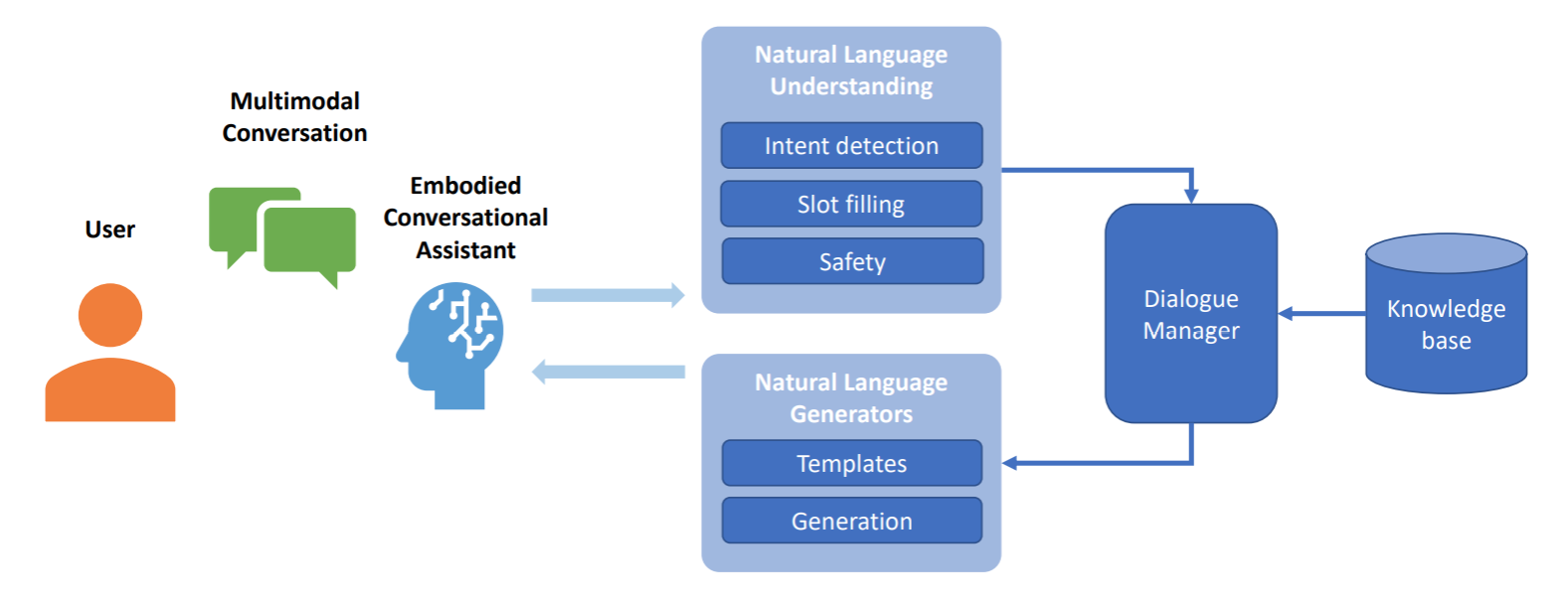

A conventional conversational agent is composed of three main parts (NLU, DM and NLG), supported by a knowledge base:

Natural Language Understanding: parses the user’s utterance and extracts the intent (and any relevant details) using machine learning.

Dialogue Manager: controls the flow of the conversation.

Natural Language Generation: produces more natural, less templated utterances.

Knowledge-Base: information or products that feed the conversation.
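To make the division of labour concrete, here is a minimal sketch of how these parts could fit together. Everything in it is an assumption made for illustration: the function names, the `play_song` intent, the keyword rule standing in for a real NLU model, and the one-entry knowledge base.

```python
from dataclasses import dataclass, field

# Hypothetical knowledge base: the data the agent can talk about.
KNOWLEDGE_BASE = {"peaches": {"artist": "Justin Bieber"}}


@dataclass
class NLUResult:
    intent: str
    slots: dict = field(default_factory=dict)


def understand(utterance: str) -> NLUResult:
    """NLU: a toy keyword rule standing in for a real ML model."""
    text = utterance.lower()
    if "play" in text:
        song = next((s for s in KNOWLEDGE_BASE if s in text), None)
        return NLUResult("play_song", {"song": song})
    return NLUResult("out_of_scope")


def manage_dialogue(result: NLUResult) -> dict:
    """DM: decide the next action from the detected intent."""
    if result.intent == "play_song" and result.slots.get("song"):
        return {"action": "play", "song": result.slots["song"]}
    if result.intent == "play_song":
        return {"action": "ask_song"}
    return {"action": "fallback"}


def generate(action: dict) -> str:
    """NLG: turn the chosen action into a reply (plain templates here)."""
    if action["action"] == "play":
        artist = KNOWLEDGE_BASE[action["song"]]["artist"]
        return f"Playing {action['song'].title()} by {artist}."
    if action["action"] == "ask_song":
        return "Which song would you like to hear?"
    return "Sorry, I can't help with that."


def respond(utterance: str) -> str:
    """Run one turn through NLU, DM and NLG in order."""
    return generate(manage_dialogue(understand(utterance)))


print(respond("Siri, play Peaches by Justin Bieber"))
```

In a real agent the `understand` step is where intent detection lives, which is the part the rest of this article focuses on.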

What is intent detection? Why should we use it?

Intent detection is the process of using a text classification model to classify the act that the user wishes the assistant to accomplish. This is an important feature to implement in a conversational agent, because there are many ways a user can ask to perform the same task:

- Could you kindly play “Peaches” by Justin Bieber, please?

- I’d really appreciate it if you could put on “Peaches” by Justin Bieber.

- Would you mind playing “Peaches” by Justin Bieber for me?

- I’d love to hear “Peaches” by Justin Bieber. Could you play it, please?

- If it’s possible, could you play Justin Bieber’s “Peaches” for me?

- I’m really in the mood for “Peaches” by Justin Bieber. Could you play it, please?

- Would you be so kind as to play “Peaches” by Justin Bieber?

- Can you add “Peaches” by Justin Bieber to the playlist, please?

- I’d be grateful if you could play Justin Bieber’s song “Peaches.”

- If it’s not too much trouble, could you play “Peaches” by Justin Bieber?

If we simply program our agent to call the function only when the user input is exactly “Play Peaches by Justin Bieber”, the accuracy of our software will be terrible. Therefore we need to use AI to analyse the user’s input and understand the act.
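A quick sketch shows how badly the exact-match approach does on the phrasings listed earlier. The function name and the `play_song` label are made up for this illustration.

```python
def exact_match_intent(utterance: str) -> str:
    """Naive rule: only the exact command triggers the play function."""
    if utterance == "Play Peaches by Justin Bieber":
        return "play_song"
    return "unknown"


requests = [
    "Play Peaches by Justin Bieber",
    "Would you mind playing “Peaches” by Justin Bieber for me?",
    "I’d love to hear “Peaches” by Justin Bieber. Could you play it, please?",
    "If it’s possible, could you play Justin Bieber’s “Peaches” for me?",
]

# Count how many requests the naive rule recognises.
hits = sum(exact_match_intent(r) == "play_song" for r in requests)
print(f"{hits} of {len(requests)} requests recognised")
```

Only the literal command is matched; every polite variation falls through to `unknown`, even though they all ask for the same thing.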

We could use Hugging Face and a text classification model such as distilbert-base-uncased-finetuned-sst-2-english, but even though this model is already pre-trained, we would still need to fine-tune it to classify intents for our specific application. This process requires a lot of labelled data (usually, the more the better). But what do we do if we are creating a small research project, or our boss did not give us enough time to collect data from our users? In that case, an LLM with billions of parameters, pre-trained on a very large text corpus, can often give us better predictions than a model like BERT fine-tuned on too little data.

How to create the right prompt

To create the ideal prompt, we first create a list of possible intents. These can be very different depending on what kind of app we wish to build. For example, if we want to build a dialog agent for a clothing shop, we could have a list of intents like:

user_search_product

user_get_product_info

user_get_product_reviews

user_get_product_brand

user_get_product_size

We should also add some general purpose intents, which will help improve user experience.

user_neutral_greeting

user_neutral_goodbye

user_neutral_tell_me_a_joke

user_neutral_meaning_of_life

user_neutral_out_of_scope

The “out_of_scope” intent is very important, since it’s used to catch any request from the user that does not make sense in the context of the application.
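One way to put this intent to work is to treat it as the fallback whenever the classifier returns a label the application does not know. The sketch below assumes a shortened intent list and a hypothetical helper called `normalise_intent`; neither comes from a specific library.

```python
# Abbreviated subset of the intents, for illustration only.
KNOWN_INTENTS = {
    "user_get_product_info",
    "user_get_product_size",
    "user_neutral_greeting",
    "user_neutral_out_of_scope",
}
FALLBACK_INTENT = "user_neutral_out_of_scope"


def normalise_intent(raw_label: str) -> str:
    """Map a raw classifier answer onto a known intent, else fall back."""
    # Models sometimes add whitespace, quotes, a trailing period or capitals.
    label = raw_label.strip().strip("\"'.").lower()
    return label if label in KNOWN_INTENTS else FALLBACK_INTENT


print(normalise_intent(" user_get_product_size.\n"))
print(normalise_intent("book_flight"))
```

With this guard in place, the rest of the agent only ever sees labels it has a handler for.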

Clothing store example

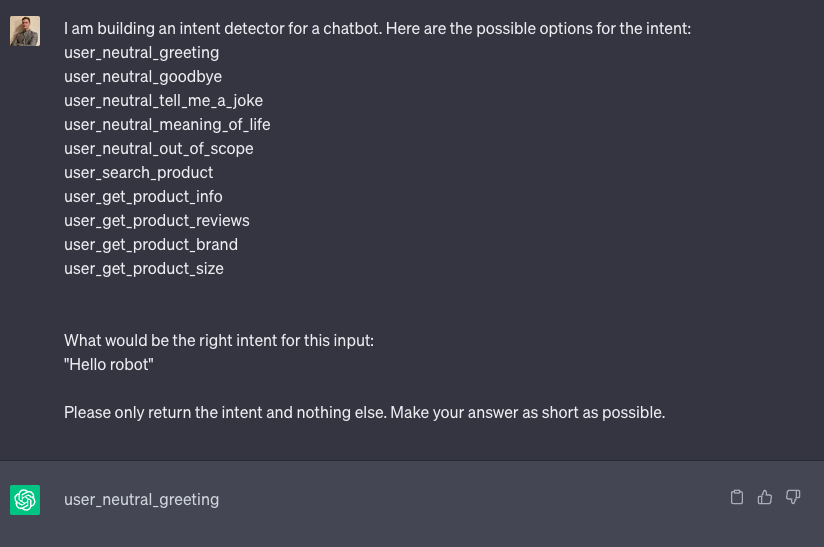

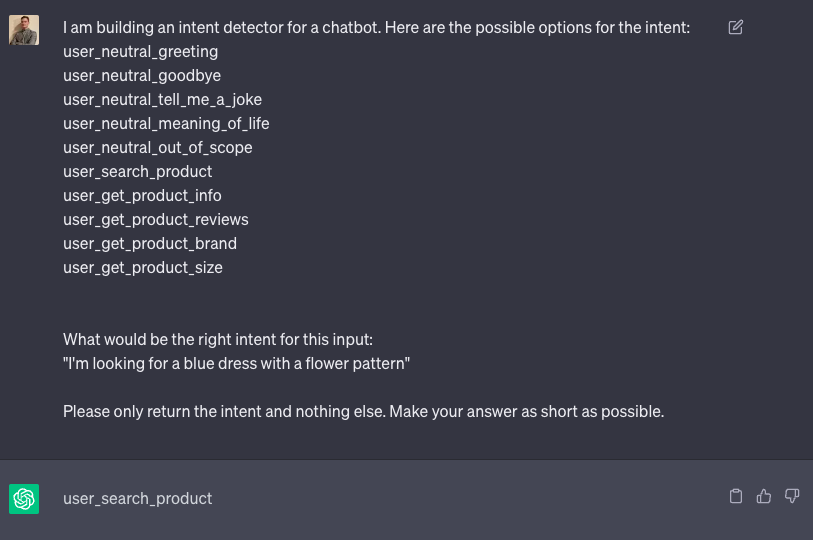

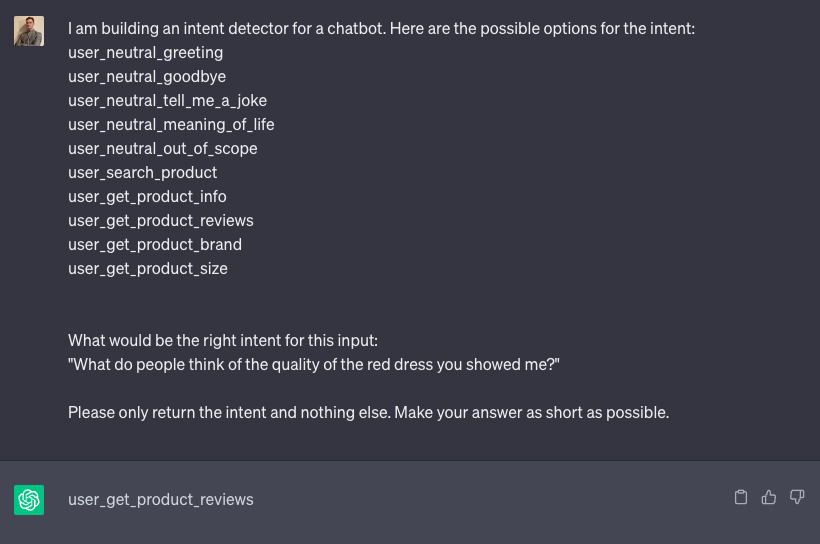

An example of a prompt for an intent detector for a clothing shop would be:

I am building an intent detector for a chatbot. Here are the possible options for the intent:

user_neutral_greeting

user_neutral_goodbye

user_neutral_tell_me_a_joke

user_neutral_meaning_of_life

user_neutral_out_of_scope

user_search_product

user_get_product_info

user_get_product_reviews

user_get_product_brand

user_get_product_size

What would be the right intent for this input:

“{prompt}”

Please only return the intent and nothing else. Make your answer as short as possible.

If we test this using ChatGPT, we can already get some pretty good results.
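To call the prompt from code rather than pasting it into a chat window, it can be wrapped in a small helper. This is a sketch under assumptions: `build_prompt`, `detect_intent` and the `user_input` placeholder are names chosen here, the intent list is shortened, and `fake_llm` is a stand-in that returns a canned answer so the example runs without any API key. In practice you would pass a function that sends the prompt to the LLM of your choice and returns its text.

```python
from typing import Callable, Sequence

PROMPT_TEMPLATE = """I am building an intent detector for a chatbot. Here are the possible options for the intent:

{intents}

What would be the right intent for this input:

“{user_input}”

Please only return the intent and nothing else. Make your answer as short as possible."""

# Shortened list for the example; use your full list in practice.
INTENTS = [
    "user_neutral_greeting",
    "user_neutral_out_of_scope",
    "user_get_product_info",
    "user_get_product_size",
]


def build_prompt(intents: Sequence[str], user_input: str) -> str:
    """Fill the template with one intent per line and the user's message."""
    return PROMPT_TEMPLATE.format(intents="\n".join(intents), user_input=user_input)


def detect_intent(
    user_input: str, intents: Sequence[str], complete: Callable[[str], str]
) -> str:
    """Send the prompt to `complete` (any prompt -> text function) and tidy the answer."""
    return complete(build_prompt(intents, user_input)).strip()


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; always returns the same canned answer."""
    return " user_get_product_size\n"


print(detect_intent("Do you have this shirt in medium?", INTENTS, fake_llm))
```

Passing the model call in as a function keeps the prompt logic independent of any one provider, which makes it easier to compare models later.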

Conclusion

You should try changing the prompt in small ways and testing the accuracy of different LLMs in your application. You can also keep adding intents as you add more functionality to your app.
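Comparing prompts or models is easier with a small labelled test set and an accuracy function. The sketch below is only an illustration: the four test sentences are invented, and the two classifiers are trivial stand-ins for real LLM-backed detectors, so the printed numbers say nothing about any actual model.

```python
from typing import Callable, Iterable, Tuple


def accuracy(
    classify: Callable[[str], str], examples: Iterable[Tuple[str, str]]
) -> float:
    """Fraction of examples where the predicted intent equals the expected one."""
    examples = list(examples)
    if not examples:
        raise ValueError("need at least one labelled example")
    correct = sum(classify(text) == expected for text, expected in examples)
    return correct / len(examples)


# Invented examples; replace with real utterances from your application.
TEST_SET = [
    ("Hi there!", "user_neutral_greeting"),
    ("Do you have this shirt in medium?", "user_get_product_size"),
    ("What do people think of these jeans?", "user_get_product_reviews"),
    ("Book me a flight to Lisbon", "user_neutral_out_of_scope"),
]


def always_greeting(text: str) -> str:
    """Stand-in classifier that ignores its input."""
    return "user_neutral_greeting"


def keyword_baseline(text: str) -> str:
    """Stand-in classifier based on a couple of keywords."""
    lowered = text.lower()
    if "medium" in lowered or "size" in lowered:
        return "user_get_product_size"
    if lowered.startswith(("hi", "hello")):
        return "user_neutral_greeting"
    return "user_neutral_out_of_scope"


for name, clf in [("always_greeting", always_greeting), ("keyword_baseline", keyword_baseline)]:
    print(f"{name}: {accuracy(clf, TEST_SET):.2f}")
```

Swapping in one function per prompt variant or per LLM gives a like-for-like comparison on the same examples.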